Diegesis and Augmented Reality

In the FOX program “Fringe”, each location title (the establishing shot that identifies the site of the following action) “embeds” the place name within the physical space of the shot:

At the same time, Volkswagen is promoting its CC sedan with this commercial:

Both examples were preceded by the first use of this technique I ever saw (skip ahead to 0:22 for the goods):

David Fincher’s 2002 film “Panic Room”, starring Jodie Foster, opens with ominous music and widescreen shots of the cavernous urban spaces for which Manhattan is known. The credit text is embedded within these scenes as well.

This visual effect is made through motion tracking, a process now available in many standard video-editing applications. Motion tracking analyzes the motion recorded by the camera and then generates markers that let users match that motion with additional data, such as text or graphics. Watch this video to see a tutorial on how easy it is to use motion tracking in Adobe After Effects.
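To make the tracking half of that process concrete, here is a minimal sketch of single-point tracking by template matching: a small patch around a marker in one frame is searched for in the next frame by minimizing the sum of squared differences (SSD). This is an illustrative toy under stated assumptions, not how After Effects or any other product implements its tracker. Frames are assumed to be plain lists of grayscale values, and the helper names (`ssd`, `extract`, `track_point`, `with_block`) are hypothetical.

```python
from typing import List, Tuple

Frame = List[List[float]]  # grayscale image, indexed [row][col]


def ssd(frame: Frame, template: Frame, top: int, left: int) -> float:
    """Sum of squared differences between the template and the frame patch at (top, left)."""
    total = 0.0
    for r, row in enumerate(template):
        for c, value in enumerate(row):
            diff = frame[top + r][left + c] - value
            total += diff * diff
    return total


def extract(frame: Frame, top: int, left: int, size: int) -> Frame:
    """Cut a size x size patch out of a frame."""
    return [row[left:left + size] for row in frame[top:top + size]]


def track_point(prev: Frame, nxt: Frame, top: int, left: int,
                size: int = 3, radius: int = 2) -> Tuple[int, int]:
    """Return the new (top, left) of the patch found at (top, left) in `prev`,
    searching every offset up to `radius` pixels away in `nxt`."""
    template = extract(prev, top, left, size)
    height, width = len(nxt), len(nxt[0])
    best, best_pos = float("inf"), (top, left)
    for dr in range(-radius, radius + 1):
        for dc in range(-radius, radius + 1):
            r, c = top + dr, left + dc
            # Skip candidate windows that would fall off the frame.
            if r < 0 or c < 0 or r + size > height or c + size > width:
                continue
            score = ssd(nxt, template, r, c)
            if score < best:
                best, best_pos = score, (r, c)
    return best_pos


def with_block(top: int, left: int, n: int = 10) -> Frame:
    """Synthetic test frame: black n x n image with a bright 3x3 marker."""
    frame = [[0.0] * n for _ in range(n)]
    for r in range(top, top + 3):
        for c in range(left, left + 3):
            frame[r][c] = 255.0
    return frame


frame_a = with_block(2, 2)  # marker at row 2, col 2
frame_b = with_block(3, 4)  # same marker one row down, two columns right
print(track_point(frame_a, frame_b, 2, 2))  # (3, 4)
```

The per-frame offset recovered this way (here one row down and two columns right) is the kind of data that can be applied to an overlay so it stays pinned to the tracked feature. Production trackers typically also estimate rotation, scale, and perspective; this sketch recovers translation only.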

The curious application in the preceding examples confuses the standard diegetic relationship in narrative visual flow. Diegesis is the fictional time, place, characters, and events that constitute the universe of the narrative. In film and in literature, content usually splits into two categories: diegetic and non-diegetic. Diegetic content is part of the action as the characters themselves understand it; non-diegetic content exists outside of that universe. Diegetic text (seen by both audience and characters) can look like this:

Non-diegetic text (seen only by the audience) typically looks like this:

Diegesis applies to a variety of elements, notably sound: a soundtrack that sets the mood of a scene or a narrator’s voice-over is non-diegetic, while music played by a character or dialogue between characters is diegetic. Sometimes comedy emerges from confusing the two, or from purposeful misdirection:

This long-standing relationship between the diegetic and the non-diegetic now enters a new phase, in which images like the Fringe location titles and the Panic Room opening are non-diegetic in nature (people on the streets of Manhattan are not looking up at Jodie Foster’s name) but diegetic in execution. Motion tracking automates the projective methods required to simulate the spatial alignment of a digital object inserted into a real scene.

Making text appear physically present in a scene may seem like a limited stylistic interest, but the emergence of augmented reality has pushed this diegetic question into new territory. Augmented reality has received substantial attention recently as pocket devices like the iPhone have promised realtime overlay of information onto live imagery. But before we were promised new states of reality, other media had already been augmented.

For the past decade, viewers watching the NFL at home have had more information than the players or coaches on the field. On the field, the line of scrimmage and the 10-yard first-down mark are indicated by upright flags on the sideline. On TV, a virtual, bright yellow line clearly marks the first-down threshold:

This line appears mapped onto the field, sticking to the appropriate yard line no matter how the camera moves. Motion tracking keeps the relationship stable in real time. Here is a description of how it works. Sportvision, the inventor of the 1st & Ten system, has a wide range of augmented reality applications across many sports. The non-diegetic line can appear diegetic as players dive across it for a first down. For other applications of augmented reality, see BMW’s new maintenance paradigm, Georgia Tech’s new game, Popular Science’s landmark cover art, and the original military application that developed the technology, the Battlefield Augmented Reality System (BARS).

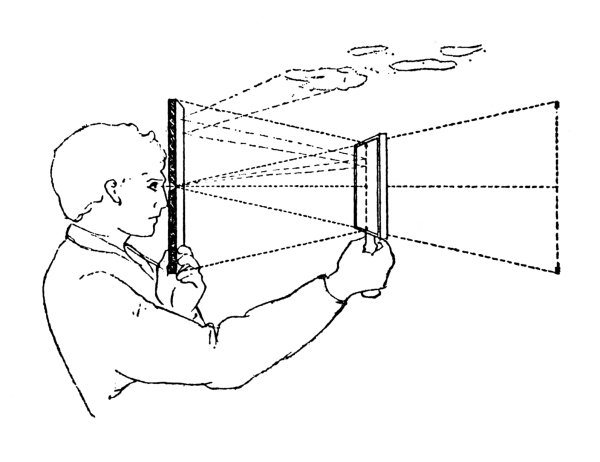

This is an interesting application of digital projection techniques, as the computer calculating the overlay needs to establish vanishing points and camera angles, with optical precision, in real time. In a way, it fulfills a promise made by the earliest demonstrations of perspective. In the early 15th century, Filippo Brunelleschi “proved” his method of drawing realistic perspective using a mirror and his painting of the Baptistery of Florence:

A hole was drilled through the panel at the painting’s vanishing point, allowing the visitor to peer through it from behind the panel. The viewer stood in front of the real Baptistery with a mirror between the scene and the panel. Moving the mirror in and out of view demonstrated the perspective’s fidelity through the virtual overlay: there was little change between the image and the real. Brunelleschi used silver leaf in the sky of the panel to reflect the real sky luminously, rather than painting static clouds. The demonstration, with its precise alignment of painting and reality and its algorithmically derived projection lines simulating perceptual geometry, is a great leap in the history of virtuality. The addition of the real clouds mirrored in the specular finish, blending aspects of the real with the virtual simulation, belongs to the ancestry of what would become augmented reality.
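The geometry shared by Brunelleschi’s panel and a broadcast overlay can be sketched with an ideal pinhole camera: a point (X, Y, Z) in camera coordinates lands on the image at (f·X/Z, f·Y/Z). As points along a line recede (Z grows), their images converge on the line’s vanishing point, which depends only on the line’s direction; lines running straight away from the viewer vanish at the image center, where Brunelleschi placed his peephole. This is a minimal sketch assuming an ideal pinhole camera with a hypothetical focal length `f` in pixels, no lens distortion, and a made-up camera placement; the function names `project` and `vanishing_point` are illustrative only.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def project(point: Vec3, f: float) -> Tuple[float, float]:
    """Ideal pinhole projection of a camera-space point (Z > 0 is in front of the camera)."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (f * x / z, f * y / z)


def vanishing_point(direction: Vec3, f: float) -> Optional[Tuple[float, float]]:
    """Image point that every line with this 3D direction converges toward.

    A line P0 + t*d projects to f*(x0 + t*dx)/(z0 + t*dz), which tends to
    f*dx/dz as t grows. Directions parallel to the image plane (dz == 0)
    have no finite vanishing point.
    """
    dx, dy, dz = direction
    if dz == 0:
        return None
    return (f * dx / dz, f * dy / dz)


f = 800.0  # hypothetical focal length in pixels
# Hypothetical setup: camera 5 units above a flat field, looking straight down
# it along +Z, with image y positive upward, so the ground sits at Y = -5.
# Two parallel lines on the ground, at X = -3 and X = +3, run along +Z.
for z in (10.0, 100.0, 1000.0):
    print(project((-3.0, -5.0, z), f), project((3.0, -5.0, z), f))
print(vanishing_point((0.0, 0.0, 1.0), f))  # (0.0, 0.0)
```

The printed pairs close in on the image center as Z grows, and a line crossing the field (direction (1, 0, 0)) returns `None`: it projects to a horizontal image line rather than converging, which is why an overlay system must recompute where such a line falls as the camera moves.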

About this entry

You’re currently reading “Diegesis and Augmented Reality,” an entry on projection systems

- Published: 13 November, 2009 / 12:10 am

